#Airflow dag bag code#

To view DAG code you can click on code.If you click on it a new window will open. When Airflow attempts to import the DAG, I cannot find any log messages, from the web server, scheduler, or worker, that would indicate a problem, or what the specific problem is. In your airflow.cfg, you've these two configurations to control this behavior: after how much time a new DAGs should be picked up from the filesystem minfileprocessinterval 0 dagdirlistinterval 60 You might have to reload the web-server, scheduler and workers for your new configuration to take effect. What would have been system level settings are now dagbag level so that one system can run multiple, independent settings sets.:param dagfolder: the folder to scan to find DAGs:type dagfolder: unicode:param executor: the executor to use when executing task instances in this DagBag:param includeexamples: whether to include the examples that. Name of the DAG will be your dag id: Data_Processing_1. The only 'supported' way to create a new DAG in Airflow is to make the DAG file appears in the DAG folder and wait until scheduler parses and schedules it. Once you have uploaded DAG code to composer, after few minute a DAG will be created in Airflow. To do that go to composer -> click on DAGs, then upload the DAG code.

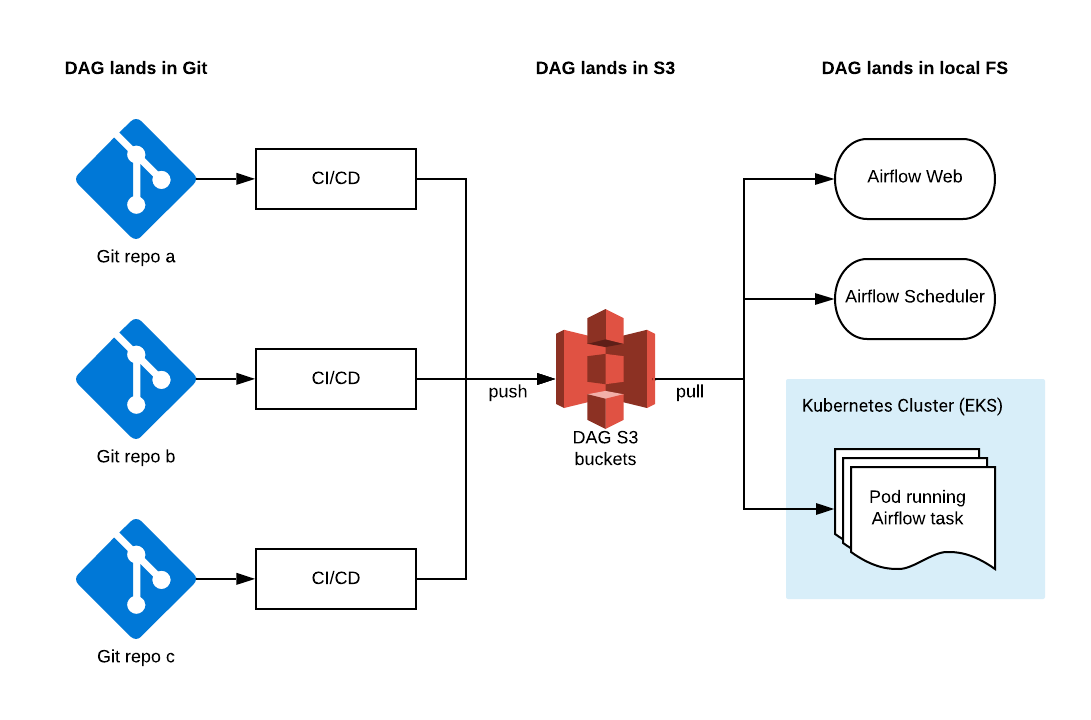

Once you have written your Airflow DAG code, you need to upload it into DAGs folder of GCP composer. It is also triggered whenever a pull request is made for the main branch. The first GitHub Action, testdags.yml, is triggered on a push to the dags directory in the main branch of the repository. Airflow Scheduler is a fantastic utility to. I have ordered tasks like: first show_date will be executed then data_processing_test will be executed. Fork and pull model of collaborative Airflow development used in this post (video only)Types of Tests. DAGs (Directed Acyclic Graphs): Number of DAG processes DAG bag size etc. Setting up dependencies: Set the order in which all tasks should be executed.data_processing_test: Our main data processing code execution.Tasks: Write all tasks for your Airflow workflow.Instantiate a DAG: You can represent a DAG by its name, configure schedule intervals and DAG settings.To know more about all kind of arguments visit official page. I would be very grateful, if you helped me fix it. Now I need to understand where I can create a 'dags' folder where I would put all of my DAGs. It is just a python dictionary, contains all the arguments which is going to apply to all the tasks in your workflow. None of these showed my SampleFile.py on Airflow webserver (I checked dagid in the file, it is alright). DAGs default arguments: Define DAG specific arguments.import packages: Import all required python dependencies for the workflow, just like other python code.These tests are important because they identify errors and catch bugs early on even before your data pipeline is deployed to a live environment. I would be very grateful, if you helped me fix it. Here, I will walk you through unit testing in Airflow. Now I need to understand where I can create a dags folder where I would put all of my DAGs. To write DAG code you just need to remember 5 important steps: None of these showed my SampleFile.py on Airflow webserver (I checked dagid in the file, it is alright).

#Airflow dag bag free#

Line no: 20 is to run your code every day.Īlso Read: Free Certificate: Google Launched Generative AI Course Steps to write an Airflow DAG script Note: You need to mention correct data processing code location in line no: 33. Main='some_folder/code/hello_GCP.py', # This is your data processing code with location Task_id='data_processing_test', #Change the name of the task_id according to task 'start_date': datetime(2021, 1, 28, 10, 15),ĭescription='Sample code to explain GCP automation using Airflow = BashOperator(ĭata_processing_test = DataProcPySparkOperator( Either the dag did not exist or it failed to parse.Īll files are present in the filesystem of the worker.From _operator import DataprocClusterCreateOperator, DataProcPySparkOperator, DataprocClusterDeleteOperatorįrom _rule import TriggerRule The task id is none and thus the worker is not executing the dag and fails.

However, the worker does not seem to load the Dags using my custom file and thus does not set the environment variable. This works perfectly for the webserver and I can see everything in the UI. In the dag file itself I get the environment variable and set the task id correspondingly. Depending on the folder, I am setting an environment variable: """ add additional DAGs folders """ĭag_bag = DagBag(os.path.expanduser(dir)) I am having a custom DAGBag loader as described here to load dags from different folders.

0 kommentar(er)

0 kommentar(er)